
Introduction to the Sum-Check Protocol

Sam Green

TL;DR

It is expensive to run transactions on the Ethereum Virtual Machine (EVM). Verifiable computing (VC) lets us outsource computing away from the EVM. Today, a popular and exciting form of VC algorithm is the SNARK. Various families of SNARKs use the sum-check protocol, which is simple enough to serve as an introduction to VC. This is a tutorial on the sum-check protocol. It focuses on how the protocol is implemented and does not go into theory. You can skip straight to the finished code here.

Thank you to Gokay Saldamli, Gabriel Soule, and Tomasz Kornuta for providing valuable feedback on this article.

Background

Executing code on Ethereum is expensive. Verifiable computing algorithms promise a way to reduce costs, by outsourcing computing to untrusted parties and only verifying the result on-chain. A key point about useful verifiable computing algorithms is that the verification must be less expensive than the original computation. The sum-check protocol is a foundational algorithm in the field of verifiable computing. In a stand-alone setting, sum-check is not particularly useful. However, it is an important building block of more sophisticated and useful SNARK algorithms. This tutorial introduces sum-check as a way to familiarize the reader with a relatively simple verifiable computing algorithm.

The sum-check protocol is used to outsource the computation of the following sum:

$$H := \sum_{x_1 \in \{0,1\}} \sum_{x_2 \in \{0,1\}} \cdots \sum_{x_v \in \{0,1\}} g(x_1, x_2, \ldots, x_v)$$

The sum-check protocol allows a Prover $P$ to convince a Verifier $V$ that $P$ computed the sum $H$ correctly. You can assume that $P$ has ample computational power or ample time to compute, e.g., it could be an untrusted server somewhere on the internet, and that $V$ has limited computational power, e.g., it could be the EVM.

Why would you want to sum a function over all Boolean inputs, as is done in the equation above? One reason would be if you wanted to provide an answer to the #SAT (“sharp sat”) problem. #SAT is concerned with counting the number of inputs to a Boolean-valued function that result in a true output. For example, what is the #SAT of $f(x_1, x_2, x_3) = x_1 \wedge x_2 \wedge x_3$? It is 1, because only a single input, $x_1 = 1$, $x_2 = 1$, $x_3 = 1$, results in 1 being output from $f$. You can probably see that it would be computationally intensive for you to solve #SAT for complex problems. With the sum-check protocol, $P$ can do that work instead of you.
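To make the #SAT idea concrete, here is a minimal brute-force counter. The helper name `count_sat` and the three-variable AND formula are illustrative assumptions, not code from the linked repo.

```python
from itertools import product


def count_sat(f, num_vars):
    """Return the #SAT value: how many Boolean inputs make f output True."""
    return sum(1 for bits in product([False, True], repeat=num_vars) if f(*bits))


# Hypothetical formula used only for illustration: the AND of three variables.
def f(x1, x2, x3):
    return x1 and x2 and x3


print(count_sat(f, 3))  # 1, since only (True, True, True) satisfies f
```

The loop visits all `2**num_vars` inputs, which is exactly the exponential work that sum-check lets a verifier hand off to a prover.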

Unless you are a complexity theorist, you may not get too excited about the #SAT problem at first glance. However, more practical problems can be mapped to #SAT, and then sum-check can be used. For example, given the adjacency matrix of a graph, you can use sum-check to count how many triangles the graph contains. You can also use sum-check to outsource matrix multiplication. Check out the book mentioned next for more details.

In this tutorial, we use the same example as given in Justin Thaler’s book: Proofs, Arguments, and Zero-Knowledge. In Justin’s book, sum-check is used to solve #SAT for the following example function:

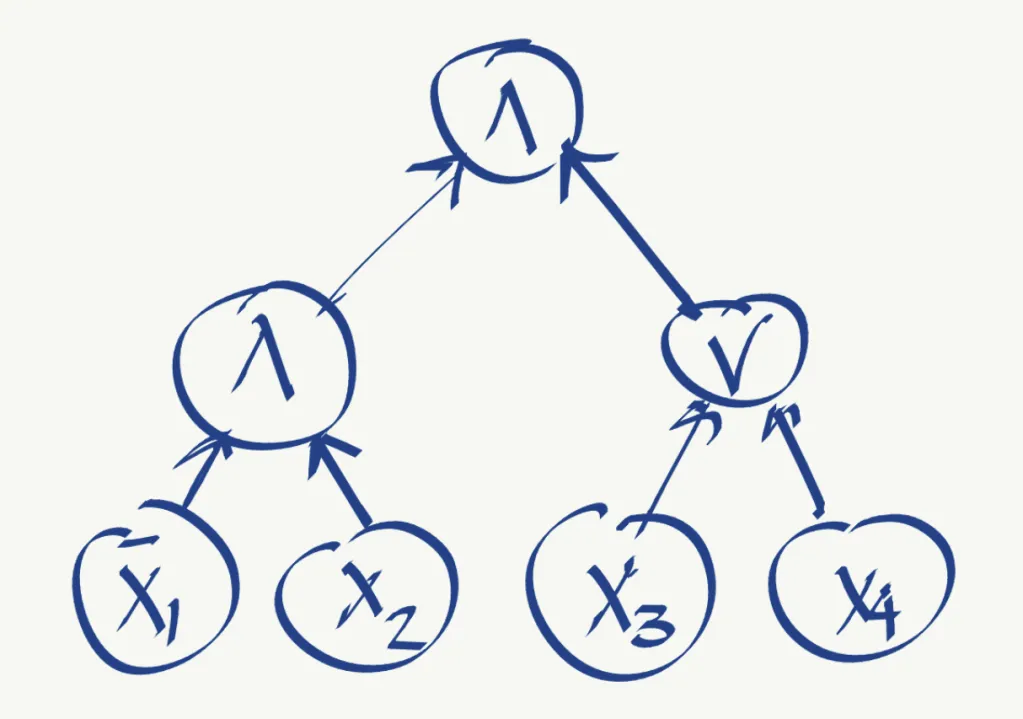

where $\bar{x}_1$ means NOT $x_1$. The function $\phi$ (phi) can also be represented graphically as:

In the graph of $\phi$, we see the logic symbols for AND ($\wedge$) and OR ($\vee$). Note that $\phi$ is only defined over the Boolean inputs (0/1) and that $\phi$ has a Boolean output. However, most popular verifiable computing algorithms only support arithmetic operations, so we need to convert $\phi$ to its arithmetic version. The arithmetized version is called $g$. For our example, $g$ is defined as:

$$g(x_1, x_2, x_3, x_4) = (1 - x_1)\, x_2 \,\big((x_3 + x_4) - (x_3 x_4)\big)$$

and visualized as:

In $g$, notice that the Boolean functions NOT, AND, and OR have been compiled into their arithmetic equivalents. Take a moment to convince yourself that if you pick Boolean values for $x_1$, $x_2$, $x_3$, and $x_4$, then $\phi$ and $g$ will output the same thing.

For reference, here are the specific compiler rules used when converting $\phi$ into $g$:

| Boolean gate | Arithmetized version |
|---|---|
| A AND B | A*B |
| A OR B | (A+B)-(A*B) |
| NOT A | 1-A |

Notice that $g$ has extended the domain of our original Boolean formula and is now valid for Boolean inputs and integers. The facts that $g$ equals $\phi$ when evaluated at Boolean inputs and that integers are valid inputs to $g$ are leveraged by the sum-check protocol.
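The agreement between the Boolean formula and its arithmetization can be checked mechanically. The sketch below assumes the example formula is (NOT x1 AND x2) AND (x3 OR x4), as in Thaler's book, and applies the compiler rules from the table above. The function names `phi` and `g` are chosen here for illustration.

```python
from itertools import product


def phi(x1, x2, x3, x4):
    """Boolean formula (NOT x1 AND x2) AND (x3 OR x4); assumed example."""
    return bool(((not x1) and x2) and (x3 or x4))


def g(x1, x2, x3, x4):
    """Arithmetization of phi: NOT -> 1-A, AND -> A*B, OR -> (A+B)-(A*B)."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


# g and phi agree on every one of the 16 Boolean inputs.
agree = all(int(phi(*b)) == g(*b) for b in product([0, 1], repeat=4))
print(agree)  # True

# Unlike phi, g also accepts non-Boolean integers.
print(g(2, 1, 1, 0))  # -1
```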

The sum-check protocol

This section introduces the sum-check protocol. We will follow the same steps and naming convention as used in Justin’s book. The protocol steps are summarized as:

1. Prover $P$ calculates the total sum of $g$ evaluated at all Boolean inputs
2. $P$ computes a partial sum of $g$, leaving the first variable $x_1$ free
3. Verifier $V$ checks that the partial sum and total sum agree when the partial sum is evaluated at 0 and 1 and its outputs added
4. $V$ picks a random number $r_1$ for the free variable and sends it to $P$
5. $P$ replaces the free variable $x_1$ with the random number $r_1$ and computes a partial sum leaving the next variable $x_2$ free
6. $P$ and $V$ repeat steps similar to 3 to 5 for the rest of the variables
7. $V$ evaluates $g$ at one input using access to an “oracle”, i.e., $V$ must make a single trusted execution of $g$

If $V$ makes it through Step 7 without error, then $V$ accepts $P$’s proof. Otherwise, $V$ rejects.

We expand the steps of the algorithm in the following sections.

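1. $P$ calculates the total sum of $g$ evaluated at all Boolean inputs

$g_0$ is the sum described in the first equation in this article. In round zero, $P$ should perform the summation described in the first equation. $P$ assigns this sum to $g_0$ and returns it to $V$. Note that this sum is the #SAT solution, i.e., it gives the answer of how many unique inputs to $\phi$ result in 1 being output from $\phi$. The remainder of the sum-check algorithm is designed to ensure that $P$ performed the #SAT solution correctly.

In our example, $g_0 = 3$ will be returned in Step 1. The following table shows $P$’s work, where $P$ must iterate over all possible Boolean combinations of $x_1$, $x_2$, $x_3$, and $x_4$ and evaluate $g$:

| $x_1$ | $x_2$ | $x_3$ | $x_4$ | $g(x_1, x_2, x_3, x_4)$ |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 0 | 0 | 0 | 1 | 0 |
| 0 | 0 | 1 | 0 | 0 |
| 0 | 0 | 1 | 1 | 0 |
| 0 | 1 | 0 | 0 | 0 |
| 0 | 1 | 0 | 1 | 1 |
| 0 | 1 | 1 | 0 | 1 |
| 0 | 1 | 1 | 1 | 1 |
| 1 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 1 | 0 |
| 1 | 0 | 1 | 0 | 0 |
| 1 | 0 | 1 | 1 | 0 |
| 1 | 1 | 0 | 0 | 0 |
| 1 | 1 | 0 | 1 | 0 |
| 1 | 1 | 1 | 0 | 0 |
| 1 | 1 | 1 | 1 | 0 |

The outputs of the above evaluations are added to arrive at $g_0 = 3$.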
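Step 1 amounts to a single loop over all Boolean inputs. This sketch assumes the arithmetized example is $(1 - x_1)\,x_2\,((x_3 + x_4) - x_3 x_4)$, the arithmetization of the formula in Thaler's book. The variable names are illustrative.

```python
from itertools import product


def g(x1, x2, x3, x4):
    """Assumed arithmetized example formula."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


# P evaluates g on all 2**4 = 16 Boolean inputs and adds up the results.
rows = [(b, g(*b)) for b in product([0, 1], repeat=4)]
g0 = sum(value for _, value in rows)

satisfying = [b for b, value in rows if value == 1]
print(g0)          # 3
print(satisfying)  # [(0, 1, 0, 1), (0, 1, 1, 0), (0, 1, 1, 1)]
```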

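2. $P$ computes a partial sum of $g$, leaving the first variable free

In Step 2, $P$ computes a sum similar to the one in Step 1, except the first variable is left free/unassigned, i.e.,

$$g_1(X_1) := \sum_{x_2 \in \{0,1\}} \cdots \sum_{x_v \in \{0,1\}} g(X_1, x_2, \ldots, x_v)$$

Note that $g_1$ is a univariate polynomial in $X_1$, because all other variables have been assigned values in each iteration of the loop. We refer to this polynomial as the “partial sum” in the next step. Also observe that the only difference between the definition for $g_0$ and the definition for $g_1$ is that the summation over $x_1$ is missing.

For our example, $g(x_1, x_2, x_3, x_4) = (1 - x_1)\, x_2 \,\big((x_3 + x_4) - (x_3 x_4)\big)$. To calculate $g_1(X_1)$, we leave $X_1$ free and then calculate the sum of $g$ evaluated at all possible Boolean combinations assigned to $x_2$, $x_3$, and $x_4$. The following table contains the intermediate calculations:

| $x_2$ | $x_3$ | $x_4$ | $g(X_1, x_2, x_3, x_4)$ |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 0 | 1 | 0 |
| 0 | 1 | 0 | 0 |
| 0 | 1 | 1 | 0 |
| 1 | 0 | 0 | 0 |
| 1 | 0 | 1 | $1 - X_1$ |
| 1 | 1 | 0 | $1 - X_1$ |
| 1 | 1 | 1 | $1 - X_1$ |

After summing all the rows in the table, we get $g_1(X_1) = 3(1 - X_1) = -3X_1 + 3$.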
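The partial sum can be sketched as a function of the free variable. The code again assumes the arithmetized example $(1 - x_1)\,x_2\,((x_3 + x_4) - x_3 x_4)$. Because that polynomial has degree 1 in $x_1$, two evaluations are enough to recover the line's coefficients.

```python
from itertools import product


def g(x1, x2, x3, x4):
    """Assumed arithmetized example formula."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


def g1(X1):
    """Partial sum: X1 stays free, x2..x4 range over all Boolean values."""
    return sum(g(X1, x2, x3, x4) for x2, x3, x4 in product([0, 1], repeat=3))


# g has degree 1 in x1, so g1 is a line determined by two evaluations.
intercept = g1(0)
slope = g1(1) - g1(0)
print(f"g1(X1) = {slope}*X1 + {intercept}")  # g1(X1) = -3*X1 + 3
```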

3. $V$ checks that the partial sum and total sum agree when the partial sum is evaluated at 0 and 1 and its outputs added

In Step 3, $V$ checks that $P$ was consistent with what they committed in Steps 1 and 2. Specifically, $V$ checks the following:

$$g_0 \stackrel{?}{=} g_1(0) + g_1(1)$$

In our example, $P$ sent $g_0 = 3$ and $g_1(X_1) = -3X_1 + 3$ to $V$ during Steps 1 and 2, respectively. So $V$ must now check that $3 = g_1(0) + g_1(1) = 3 + 0$, which it does. If this check failed, then $V$ would end the protocol, and $P$’s claim for $g_0$ (which is the supposed answer to the #SAT problem) would be rejected.
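A minimal sketch of the verifier's consistency test follows. The helper name `round_check` is hypothetical, and the polynomial is the partial sum an honest prover would send under the assumed example formula (NOT x1 AND x2) AND (x3 OR x4).

```python
def round_check(claimed_sum, poly):
    """V's Step 3 test: does poly(0) + poly(1) equal the previous claim?"""
    return claimed_sum == poly(0) + poly(1)


def g1(X1):
    # Partial sum an honest prover would send for the assumed example.
    return -3 * X1 + 3


print(round_check(3, g1))  # True: 3 == 3 + 0
print(round_check(4, g1))  # False: a claim of 4 is inconsistent with g1
```

Note that the verifier only evaluates a univariate polynomial twice here; it never touches the full sum.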

Note here what is happening: in Step 1, $P$ made a commitment to the #SAT answer. In Step 2, $P$ also did almost all of the work for $V$, except $V$ still needed to evaluate the univariate polynomial $g_1(X_1)$ at two points: $X_1 = 0$ and $X_1 = 1$. This is much less work than what $P$ must do.

Observe that at this point, $V$ doesn’t know whether the polynomial being evaluated by $P$ is actually the polynomial of interest, $g$. At this point, $V$ only knows $P$ has been consistent with the polynomial they have used. In fact, everything until Step 7 is only used to verify the consistency of $P$’s answers. Step 7 resolves this uncertainty and is used to prove that $P$ has been both consistent and correct. This observation is one of the “slippery” aspects in understanding the sum-check protocol.

4. $V$ picks a random number for the free variable and sends it to $P$

Before beginning this step, it’s time to introduce a security aspect of the protocol. When the protocol starts, $V$ tells $P$ what finite field $\mathbb{F}_p$ they will be working with. This means that all numbers will be restricted (by using the modulus operator) to be between 0 and $p - 1$. For example, if $P$ is told that $p = 16$ then $P$ will reduce everything mod 16, e.g. $-1 \bmod 16 = 15$ and $17 \bmod 16 = 1$. Strictly speaking, arithmetic mod $p$ forms a field only when $p$ is prime, so 16 should be read as a toy modulus that keeps the numbers small.

Next, $V$ picks a random number $r_1$ for $P$ to assign to $X_1$. $r_1$ is selected from the finite field $\mathbb{F}_p$. For the running example in this article, we will pick $p = 16$. From here on, all example calculations will be performed mod 16.

For the remainder of the protocol, $P$ will always replace $X_1$ with $r_1$. Note that something interesting is now happening. Up until this point, $g$ was only evaluated with Boolean inputs, but now the protocol is leveraging the fact that because $g$ is a polynomial, it can be evaluated using arbitrary integers instead of only 0s or 1s. This is where the security of the protocol is derived: if $p$ is very large, it will be difficult for $P$ to guess the random challenges before receiving them.

For our running example, we will assume that $V$ selects $r_1 = 2$.
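In Python, reduction into the range 0 to $p - 1$ and the verifier's random draw could look like the sketch below. The modulus 16 follows the running example. Using the `secrets` module is an assumption made here, and the linked repo may draw its randomness differently.

```python
import secrets

p = 16  # toy modulus from the running example

# Python's % operator returns a result in 0..p-1 for a positive modulus.
print(-1 % p)  # 15
print(17 % p)  # 1

# V draws r1 uniformly from {0, 1, ..., p - 1} using a
# cryptographically strong source of randomness.
r1 = secrets.randbelow(p)
print(0 <= r1 < p)  # True
```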

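5. $P$ replaces the free variable with the random number and computes a partial sum leaving the next variable free

$P$ calculates the sum again, except now they replace $X_1$ with the random number $r_1$:

$$g_2(X_2) := \sum_{x_3 \in \{0,1\}} \cdots \sum_{x_v \in \{0,1\}} g(r_1, X_2, x_3, \ldots, x_v)$$

For our example, at the end of Step 2, $P$ calculated $g_1(X_1) = -3X_1 + 3$. At the end of Step 4 above, we mention that $V$ selected $r_1 = 2$. So $P$ replaces $X_1$ with $2$, giving $g_2(X_2) = -3X_2 \equiv 13X_2 \pmod{16}$. $P$ returns $g_2$ to $V$.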
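The next partial sum can be computed the same way as the first, with the challenge substituted for the first variable. This sketch assumes the arithmetized example $(1 - x_1)\,x_2\,((x_3 + x_4) - x_3 x_4)$, the challenge $r_1 = 2$, and the toy modulus 16.

```python
from itertools import product

P_MOD = 16  # toy modulus from the running example
r1 = 2      # assumed first challenge


def g(x1, x2, x3, x4):
    """Assumed arithmetized example formula."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


def g2(X2):
    """Partial sum with x1 fixed to r1 and X2 free, reduced mod P_MOD."""
    total = sum(g(r1, X2, x3, x4) for x3, x4 in product([0, 1], repeat=2))
    return total % P_MOD


print(g2(0), g2(1))  # 0 13
```

Since `g2` is linear, the two values 0 and 13 describe it completely: it is $13X_2$, which is the same as $-3X_2$ mod 16.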

6. $P$ and $V$ repeat steps similar to 3 to 5 for the rest of the variables

Steps 3 through 5 above constitute one “round” of the protocol. If $v$ is the number of variables in $g$, then $v$ rounds in total are required to complete the protocol. Each round includes the same operations as given in Steps 3 through 5 above, with the exception that a different variable is left free each round.

For example, at the end of Step 5, $P$ returns $g_2(X_2) = -3X_2$ to $V$. If we apply the pattern in Step 3, noting that $g_1(r_1) = g_1(2) = -3 \equiv 13 \pmod{16}$, we see that $V$ must now check that:

$$g_1(r_1) \stackrel{?}{=} g_2(0) + g_2(1) \pmod{16}$$

Here $g_2(0) + g_2(1) = 0 + (-3) \equiv 13 \pmod{16}$, so round 2 passes.

In our example, $g$ has four variables, so we will cycle through Steps 3 to 5 four times, then we move to Step 7.
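The four rounds can be traced in one loop. The sketch below assumes the arithmetized example $(1 - x_1)\,x_2\,((x_3 + x_4) - x_3 x_4)$ and the toy modulus 16. The challenge $r_1 = 2$ matches the running example, while the remaining challenges (3, 5, 7) are made-up values used only so the trace is reproducible. Each round polynomial is linear, so the prover sends just its values at 0 and 1.

```python
from itertools import product

P_MOD = 16
NUM_VARS = 4
CHALLENGES = [2, 3, 5, 7]  # r1 = 2 as in the text; the rest are made up


def g(x1, x2, x3, x4):
    """Assumed arithmetized example formula."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


def round_evals(fixed):
    """Prover: values s(0), s(1) of the round polynomial, with earlier
    variables bound to `fixed` and later variables summed over {0, 1}."""
    free = NUM_VARS - len(fixed) - 1
    return tuple(
        sum(g(*fixed, X, *rest) for rest in product([0, 1], repeat=free)) % P_MOD
        for X in (0, 1)
    )


claim = sum(g(*b) for b in product([0, 1], repeat=NUM_VARS)) % P_MOD  # g0
claims = [claim]
for i, r in enumerate(CHALLENGES):
    s0, s1 = round_evals(CHALLENGES[:i])
    assert (s0 + s1) % P_MOD == claim, f"round {i + 1} failed"
    # s is linear, so s(r) = s0 + (s1 - s0) * r gives the next claim.
    claim = (s0 + (s1 - s0) * r) % P_MOD
    claims.append(claim)

print(claims)  # [3, 13, 7, 14, 5]
```

The first two entries, 3 and 13, are the values checked in rounds 1 and 2 above.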

7. $V$ evaluates $g$ at one input using access to an “oracle”, i.e., $V$ must make a single trusted execution of $g$

After round $v$ has successfully been checked, $P$ has incorporated $r_1, \ldots, r_{v-1}$. The last step is for $V$ to select $r_v$ and use an oracle to evaluate $g(r_1, \ldots, r_v)$. The oracle is a trusted source which is guaranteed to evaluate $g$ correctly at a single input. Alternatively, if no oracle is available, then $V$ must compute $g(r_1, \ldots, r_v)$ once by itself. Without an oracle, $V$ would be required to store and compute $g$, which could be costly.

After $g(r_1, \ldots, r_v)$ is provided by the oracle, or computed by $V$ itself, $V$ checks:

$$g_v(r_v) \stackrel{?}{=} g(r_1, \ldots, r_v)$$

If this check passes, then $V$ accepts $P$’s claim that $g_0$ is equal to $H$, or, in our example, that $3$ is the #SAT of $\phi$.
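Putting the steps together, an end-to-end run might look like the sketch below. It is not the code from the linked repo. The names `make_prover` and `verify`, the prime modulus $2^{61} - 1$, and the cheating polynomial `h` are all assumptions made for illustration, and the linear interpolation relies on the assumed example $g$ having degree at most 1 in each variable.

```python
import secrets
from itertools import product

P_MOD = 2**61 - 1  # a prime modulus, assumed here in place of the toy value 16


def g(x1, x2, x3, x4):
    """Assumed arithmetized example formula."""
    return (1 - x1) * x2 * ((x3 + x4) - (x3 * x4))


def h(x1, x2, x3, x4):
    """A different polynomial a cheating prover might use (it sums to 6)."""
    return x2 * ((x3 + x4) - (x3 * x4))


def make_prover(poly, num_vars):
    """Prover that answers every round consistently with `poly`."""
    def prover(fixed):
        free = num_vars - len(fixed) - 1
        return tuple(
            sum(poly(*fixed, X, *rest)
                for rest in product([0, 1], repeat=free)) % P_MOD
            for X in (0, 1)
        )
    return prover


def verify(oracle, num_vars, claimed_sum, prover):
    """V's side of the protocol: return True to accept, False to reject."""
    claim = claimed_sum % P_MOD
    challenges = []
    for _ in range(num_vars):
        s0, s1 = prover(challenges)
        if (s0 + s1) % P_MOD != claim:
            return False  # inconsistent with the previous round
        r = secrets.randbelow(P_MOD)
        claim = (s0 + (s1 - s0) * r) % P_MOD  # linear round polynomial at r
        challenges.append(r)
    # Step 7: one trusted evaluation of g at the random point.
    return oracle(*challenges) % P_MOD == claim


print(verify(g, 4, 3, make_prover(g, 4)))  # True
print(verify(g, 4, 6, make_prover(h, 4)))  # False, except with negligible probability
```

The second call shows why Step 7 matters: the cheating prover passes every round check because it is perfectly consistent with `h`, and only the final oracle evaluation of `g` exposes it.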

Conclusions and further reading

Python code implementing sum-check can be found in this repo. We omitted security checks in this tutorial for brevity, but those checks are included in the linked repo. Also, this article did not explain why sum-check is secure, nor did it cover the practical costs of the algorithm. Briefly, the security of sum-check can be analyzed using the Schwartz–Zippel lemma; it is basically like random sampling used for quality control. Regarding the costs, to solve #SAT without the sum-check protocol, $V$ would have had to evaluate $g$ at $2^v$ inputs, but with sum-check, the cost for $V$ drops to $v$ steps of the protocol plus a single evaluation of $g$. If you want more depth, see Justin’s book, which is linked above, and these resources:

- Sum-check article by Justin Thaler: The Unreasonable Power of the Sum-Check Protocol
- Sum-check notes by Edge & Node cryptographer, Gabriel Soule: https://drive.google.com/file/d/1tU50f-IpwPdCEJkZcA7K0vCr7nwwzCLh/view
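On the security point mentioned above, the standard bound from Thaler's book can be stated in one line. Here $d$ is an upper bound on the degree of $g$ in each variable, $v$ is the number of variables, and $\mathbb{F}$ is the field. The worked numbers assume the example polynomial has $d = 1$ and $v = 4$, and they use a hypothetical prime field of size about $2^{61}$ rather than the toy modulus 16.

```latex
\Pr[\,V \text{ accepts a false claim}\,] \;\le\; \frac{d \cdot v}{|\mathbb{F}|},
\qquad \text{e.g. } \frac{1 \cdot 4}{2^{61}} = 2^{-59}.
```

This is why the size of the field matters: with a modulus as small as 16, the same bound would only be $4/16$.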

If you have made it this far, you now hopefully have a feel for how interactive arguments of knowledge work. This is the first time we have mentioned “interactive”. Note that $P$ and $V$ had to communicate in each round. This is not ideal in scenarios where computers can go offline, i.e., the real world. The Fiat-Shamir transform makes sum-check non-interactive. As you may know, SNARKs also eliminate interactions, as implied by the “N” in the name, which stands for “Non-interactive”. SNARKs also use Fiat-Shamir. Perhaps we will make a part 2 of this article where we implement a non-interactive version of sum-check.
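As a rough illustration of the Fiat-Shamir idea, the verifier's random challenge can be replaced by a hash of everything the prover has sent so far, so the prover can compute the challenge without waiting for anyone. The function name, the transcript encoding, the choice of SHA-256, and the modulus below are all assumptions for this sketch. A production design would specify the encoding and domain separation much more carefully.

```python
import hashlib

P_MOD = 2**61 - 1  # assumed prime modulus


def fiat_shamir_challenge(transcript: bytes) -> int:
    """Derive a challenge in [0, P_MOD) by hashing the transcript so far."""
    digest = hashlib.sha256(transcript).digest()
    return int.from_bytes(digest, "big") % P_MOD


# Hypothetical transcript encoding: the claimed sum, then g1's values at 0 and 1.
transcript = b"g0=3;g1(0)=3;g1(1)=0"
r1 = fiat_shamir_challenge(transcript)

# Anyone holding the same transcript recomputes the same challenge.
print(r1 == fiat_shamir_challenge(transcript))  # True
```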